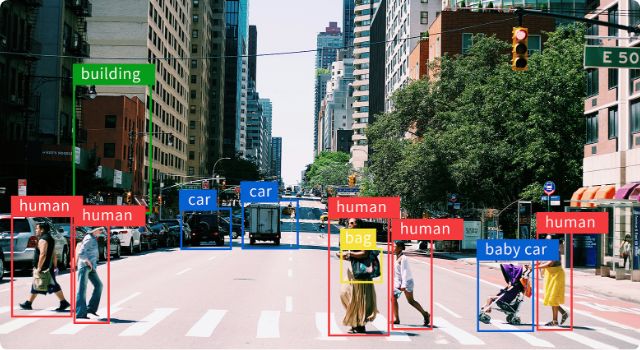

DETR-ResNet101 is an object detection model that combines the Detection Transformer (DETR) with a ResNet-101 backbone for feature extraction. Introduced by Facebook AI Research, DETR treats detection as a direct set-prediction problem: a transformer encoder-decoder predicts a fixed-size set of objects in a single pass. This removes hand-designed components such as anchor boxes and non-maximum suppression, so the whole pipeline can be trained end to end.

The ResNet-101 backbone, a 101-layer residual convolutional network, extracts the deep feature maps that the transformer operates on. These rich representations help the model capture fine details and detect and classify objects across a range of scales and contexts.

Because the transformer's self-attention reasons over the entire image at once, DETR-ResNet101 handles complex scenes containing many objects well. On the COCO benchmark, DETR performs on par with a well-tuned Faster R-CNN baseline, doing better on large objects and worse on small ones. Its end-to-end training simplifies optimization and integration into applications such as autonomous driving, video surveillance, and image analysis.

Source model

- Input shape: 480x480

- Number of parameters: 57.66M

- Model size: 232.5 MB

- Output shape: 1x100x92, 1x100x4
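The output shapes follow the original DETR design: 100 object queries, each with 92 class scores (91 COCO category slots plus a final "no object" class) and one bounding box. The plain-Python sketch below shows one way to turn those two outputs into detections. It assumes, without confirmation from this page, that the runtime returns the class logits first and the boxes second as flat float buffers, that the scores are raw (pre-softmax) logits, and that boxes are normalized (cx, cy, w, h) relative to an input that was resized, not letterboxed, from the original image. The 0.7 threshold and every function name here are illustrative and are not part of the AidLite SDK.

```python
import math
from typing import List, Sequence, Tuple

# Assumed DETR output semantics (as in the original DETR release):
#   logits: 100 queries x 92 raw class scores, last column = "no object"
#   boxes:  100 queries x 4 values (cx, cy, w, h), normalized to [0, 1]
NUM_QUERIES = 100
NUM_LOGITS = 92

Box = Tuple[float, float, float, float]
Detection = Tuple[int, float, Box]  # (label index, score, pixel box)


def reshape(flat: Sequence[float], rows: int, cols: int) -> List[List[float]]:
    """Turn a flat output buffer into rows x cols (batch dimension of 1 dropped)."""
    if len(flat) != rows * cols:
        raise ValueError(f"expected {rows * cols} values, got {len(flat)}")
    return [list(flat[r * cols:(r + 1) * cols]) for r in range(rows)]


def softmax(xs: Sequence[float]) -> List[float]:
    """Numerically stable softmax over one query's class scores."""
    m = max(xs)
    exps = [math.exp(x - m) for x in xs]
    total = sum(exps)
    return [e / total for e in exps]


def cxcywh_to_xyxy(box: Sequence[float], img_w: int, img_h: int) -> Box:
    """Convert a normalized (cx, cy, w, h) box to pixel (x0, y0, x1, y1)."""
    cx, cy, w, h = box
    return ((cx - w / 2) * img_w, (cy - h / 2) * img_h,
            (cx + w / 2) * img_w, (cy + h / 2) * img_h)


def postprocess(flat_logits: Sequence[float], flat_boxes: Sequence[float],
                img_w: int, img_h: int, threshold: float = 0.7) -> List[Detection]:
    """Keep queries whose best real-class probability exceeds the threshold.

    No non-maximum suppression step is applied here.
    """
    logits = reshape(flat_logits, NUM_QUERIES, NUM_LOGITS)
    boxes = reshape(flat_boxes, NUM_QUERIES, 4)
    detections: List[Detection] = []
    for q_logits, q_box in zip(logits, boxes):
        probs = softmax(q_logits)[:-1]  # drop the "no object" column
        score = max(probs)
        if score > threshold:
            label = probs.index(score)
            detections.append((label, score, cxcywh_to_xyxy(q_box, img_w, img_h)))
    return detections
```

Because DETR is trained with a one-to-one matching between queries and ground-truth objects, plain thresholding takes the place of the non-maximum suppression step that anchor-based detectors need. In the original DETR release, the label index corresponds directly to a COCO category ID.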

Source model repository: DETR-ResNet101

The model performance benchmarks and inference example code provided on Model Farm are all based on the APLUX AidLite SDK.

SDK installation

For details, please refer to the AidLite Developer Documentation.

- Install AidLite SDK

```bash
# install aidlite sdk c++ api
sudo aid-pkg -i aidlite-sdk

# install aidlite sdk python api
python3 -m pip install pyaidlite -i https://mirrors.aidlux.com --trusted-host mirrors.aidlux.com
```

- Verify AidLite SDK

```bash
# aidlite sdk c++ check
python3 -c "import aidlite; print(aidlite.get_library_version())"

# aidlite sdk python check
python3 -c "import aidlite; print(aidlite.get_py_library_version())"
```

Inference example

- Select your target device, backend and model precision in the Performance Reference panel on the right.

- Click Model & Test Code to download the model files and inference code. The file structure is shown below:

```
/model_farm_{model_name}_aidlite
|__ models      # folder where model files are stored
|__ python      # aidlite python model inference example
|__ cpp         # aidlite cpp model inference example
|__ README.md
```
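The downloaded inference examples are the reference for how inputs should be prepared. For readers adapting the model to their own pipeline, the sketch below illustrates what feeding the 480x480 input might involve. It assumes, without confirmation from this page, that the model expects a flat buffer in channel-first (CHW) order, normalized with the ImageNet mean and standard deviation used by the original DETR training transforms; check both against the example code. The function names are hypothetical, and the code uses plain Python and nearest-neighbour resizing for readability, where a real pipeline would use OpenCV or NumPy.

```python
from typing import List, Sequence, Tuple

Pixel = Tuple[int, int, int]  # (R, G, B), each 0-255

# Assumed normalization: ImageNet mean/std, as in the original DETR transforms.
MEAN = (0.485, 0.456, 0.406)
STD = (0.229, 0.224, 0.225)


def resize_nearest(img: Sequence[Sequence[Pixel]], out_h: int, out_w: int) -> List[List[Pixel]]:
    """Nearest-neighbour resize of an H x W grid of RGB pixels."""
    in_h, in_w = len(img), len(img[0])
    return [[img[y * in_h // out_h][x * in_w // out_w] for x in range(out_w)]
            for y in range(out_h)]


def preprocess(img: Sequence[Sequence[Pixel]], size: int = 480) -> List[float]:
    """Resize to size x size and return a flat, normalized CHW float buffer."""
    resized = resize_nearest(img, size, size)
    chw: List[float] = []
    for c in range(3):  # channel-major order (NCHW with N = 1) is an assumption
        for row in resized:
            for pixel in row:
                chw.append((pixel[c] / 255.0 - MEAN[c]) / STD[c])
    return chw
```

Resizing straight to 480x480 does not preserve the aspect ratio. If the example code letterboxes instead, any conversion of predicted boxes back to original image coordinates has to account for the padding.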